Some years ago the editor of this Newsletter complained of the over-use of the description “artificial intelligence” in legal products: “hyping AI is unhelpful”, he said. “AI is just what computers do.” And he’s not alone in expressing scepticism about the often extravagant claims of AI. Much of the mystique around it stems from simple ignorance. As the saying goes, “It’s only AI when you don’t know how it works; once it works, it’s just software.”

But while there is undoubtedly a lot of hype about AI, a lack of knowledge about how it works is often entirely excusable. That’s because the more genuinely artificial the intelligence of a process, the harder it is to explain or interrogate it. It operates inside a black box. You can see what goes in, and you can see what comes out, but you can’t break open the box to see how and why it arrived at that result.

In some contexts this can seem quite sinister. AI models that assess the risk of reoffending for bail may depend on biased input data, thus producing a biased result. And there’s been ominous talk of robot lawyers and cyber judges which does seem a tad hyped. Like all software, AI is just a tool and in most cases what it supplants is routine drudgery, not added-value lawyering.

ICLR.4 implements Case Genie

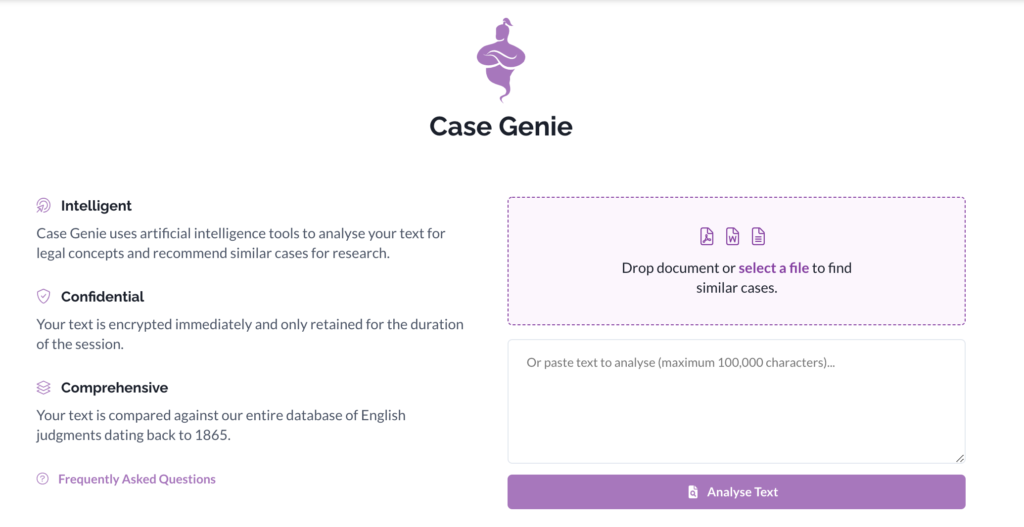

All of this has made me quite cautious in using AI to describe the standout new feature of ICLR.4, the latest upgrade to ICLR’s online case law platform which launched on 1 November 2021. Case Genie uses a branch of AI known as natural language processing (NLP) to analyse an unstructured legal document or text uploaded by the user, and then to suggest the most relevant cases dealing with its subject matter.

What sort of content can be uploaded? The typical use case would be a skeleton argument, to see if a potentially helpful case had been overlooked. Another might be to update a journal article or check the state of the law for a current awareness update.

The similarity pipeline

The input text is first encrypted, because we know that lawyers are concerned about confidentiality. For the same data protection reasons, we have relocated our servers to the UK. Moreover even the uploaded document (or supplied text) is deleted as soon as the pipeline completes; only output data (encrypted) is kept and only for the duration of the search session.

The text is then passed through a pipeline of processes, during which it is tokenised – broken down into sentences and individual words, and parsed for parts of speech and dependency. Entities such as case names, citations, courts, and legal concepts and ideas are identified and isolated.

We then use a classification library program called fastText to look up and assign to each word vectors from a model which we created using the vast corpus of our own existing content – hundreds of thousands of case reports and judgments dating from 1865 to, basically, yesterday. Each vector consists of a value and a direction. Think of it as a location plotted on a two-dimensional graph: then expand the number of dimensions to 600. For us muggles, it can be quite hard to think of this idea in anything more than three dimensions. But, with 600 dimensions, what it means is that each word is assigned an “embedding” comprising 600 vectors. Those vector readings are then used to compare the input text or document with our existing corpus, to find and suggest the most closely matching cases in terms of subject matter, in both fact and law.

We are not the first to do this: there is a company called CaseText in the United States who have done something similar for American law. Their new WeSearch tool uses 768 vectors but it does so against a far greater range of content, which presumably justifies those extra dimensions of embedding. But we (or to be accurate our brilliant developers, 67 Bricks) developed our own version, adapting the existing open source programs and libraries to the demands of our own particular type of content, ie English case law. We used fastText to assign the vectors and a library called FAISS (Facebook’s library for similarity search for very large datasets) to manage the similarity comparison process. (For a more detailed explanation, see Understanding FAISS … And the world of Similarity Searching by Vedashree Patil on Towards Data Science, and English word vectors on fastText.)

In this way, Case Genie is able to suggest up to 50 similar cases, among which to search or filter to find the most relevant, or undiscovered or potentially overlooked cases.

Linked results

To these can be added a further set of results, consisting of those cases linked by way of citation to the initially suggested cases. Because judgments cite other judgments, we are able to create a network of interlinked cases.

We use an algorithm called HITS to assign two values to each case: a “hub” score and an “authority” score. The algorithm is iterative and uses the hub scores of the previous iteration to calculate an iteration’s authority scores, and the authority scores of the previous iteration to calculate an iteration’s hub scores. The authority score of a case is determined by how many later cases cite it in their judgments (using their hub scores). The hub score of a case is determined by how many earlier cases it cites (using their authority scores).

We use the network of cases and the authority scores to find further relevant cases based on the cases already found and suggested on the basis of their similarity or relevance to the input text, as described above. The justification for this is that there may be authoritative cases that are not similar to the uploaded document, but may still be relevant to the problem.

Assessing and optimising results

The user has the option of priming their search using either the suggested cases (based on similarity) or the linked cases (based on citation) — or both. Results by can be ranked by similarity alone or relevance (which combines this with a number of other facts, including how closely linked they are).

Each result listed will show some indication of the subject matter of the case, either from its indexed catchwords or by quoting from the opening of the judgment. Where the case has been cited or considered in a later case, there will be colour-coded “signal” as to its status and frequency of citation.

Where cases have been cited in the input material, these are extracted and listed in a separate report, which shows their current status and checks citations against our own case information index.

As with many computer processes, the better the input material, the more focused it is, the better the results will be. Because of the way it works, as a black box system, it is not possible to explain why any particular case result has been suggested. Hence the hint of mystery in the name. We picked the name “Genie” because we hate robots. The idea of a cute robot coming up with helpful suggestions was anathema because it’s overused and because it generates a false expectation of transparent logic. Black box systems are by definition not susceptible to transparency and their logic cannot always be interrogated or cross examined. It is what it is. But until you’ve tried it, you won’t know what you might be missing.

Other features

When reading case reports, a new “Similar Paragraph” feature enables the user to click on any numbered paragraph in a judgment and be shown links to paragraphs in other cases that discuss similar cases, legislation or subject matter.

A newly designed Case Info display, shows an expanded range of index data, including cases citing or cited by a case, with a table of contents to help navigate. Links to and from the electronic edition of Blackstone’s Criminal Practice and Blackstone’s Civil Practice are now included in an expanded Commentary section.

A new browse feature enables users to find recent cases by legal topic and subject matter catchword headings (using the same taxonomy as our printed indexes). You can now also browse by court, as well as by law report series and volume.

Finally, thanks in part to a vast improvement in the quality of data available from the National Archives, we have a much improved legislation service, which includes a new “timeline” feature enabling the user to select and view the version of a statute in force as at a particular date.

In conclusion, we are confident that even without waving the AI flag, when version 4 of ICLR Online launches on 1 November we will have delivered a major upgrade to our online delivery of content. Indeed, we are no longer just delivering content, but legal data services more generally.

Paul Magrath is Head of Product Development and Online Content at the Incorporated Council of Law Reporting. Email paul.magrath@iclr.co.uk. Twitter @maggotlaw.

Photo via PxHere.