The term “big data” essentially refers to very large sets of data, as well as the processes used for capturing, analysing and extracting value from these data sets. An often-quoted definition of big data is Gartner’s 3 Vs: “Big data is high-volume, high-velocity and/or high-variety information assets that demand cost-effective, innovative forms of information processing that enable enhanced insight, decision making, and process automation.” Discussions around big data often focus on public benefits (such as crime prevention or health research) or its value to business (such as upselling and recommendations engines). Big data techniques can help people to spot general patterns or trends (often with the use of visual displays) but can also be applied on an individual targeted level.

A clear example of big data use is the Facebook advertising model which delivers highly targeted adverts using the vast array of information freely provided by its users such as relationship status, political and religious views, age and current mood (which might even be capable of being manipulated by Zuckerberg et al). Quite a different, non-commercial example of visualised big data is this interactive crime map of England which uses big data from the police and Ordnance Survey.

Data protection and privacy

There has been a huge overall surge in data capture as a result of the increasingly ubiquitous online presence of individuals whose personal details are harvested whenever they make an online purchase, sign up to a service or download an app. The amount of data will only grow as the Internet of Things (IoT) permeates our lives and, as big data lays down its roots in the everyday lives of citizens, data protection and privacy laws will continue to develop and adapt to address new questions. Lawyers will need to advise their clients on staying compliant and reducing risk in the light of new developments such as the EU-US Privacy Shield and the General Data Protection Regulation (GDPR).

The potential for businesses to be fined €20 million or up to 4 per cent of their worldwide turnover for serious breaches of the forthcoming GDPR provide a sobering example of just how seriously businesses need to take data protection issues. One of the main problems with data protection law at the moment is not only lack of awareness and understanding of existing obligations but in staying abreast of the latest changes in this fast-developing area. Most people outside the data protection community are probably unaware of the rocky waters cast by the Schrems judgment in destroying Safe Harbor and its impending replacement with Privacy Shield. So it’s usually down to lawyers to ensure their clients are complying with the latest regulations and not putting themselves at risk – and the bigger the data, the bigger the risk!

Data ownership and intellectual property

Ownership of data is often a prerequisite to extracting its value using big data techniques. But establishing just who “owns” any particular data is often quite tricky – if Facebook serves you with maternity wear adverts because you posted that you are pregnant, they are clearly monetising your data but you cannot (currently) claim a percentage of this revenue. Furthermore, any intellectual property rights are more likely to be vested in the databases and database analysis software rather than the data itself. As well as understanding who owns private data, it’s also important to establish the ownership of public data and permissible uses, as governments gradually release various types of public sector data.

Opportunities

Joanne Frears, Partner of IP, Technology, Innovation at Blandy & Blandy, argues that “Although most law firms seem to consider big data a techie subject and thus not suitable for inclusion in strategic discussions, this is hugely short sighted. We are not far off a time when anyone conducting an average Google search can discover more about a lawyer’s client than the client’s lawyer [can discover using their own systems] because CMS software is not fit for purpose or because operators are incapable of interrogating it!” In other words, although many firms already hold large amounts of data on their clients, the methods of analysing or capturing useful information from this big data are often ineffective.

Joanne also alludes to the emerging field of predictive coding in e-disclosure, as well as the potential for big data to help guide lawyers or, as suggested by the Gartner definition, automate certain processes: “There are already near-future applications of big data to risk management, evidence and artificial intelligence in case conduct … add to this that big data offers massive opportunities for productisation of lawyers skills and knowledge – for better or worse – and lawyers without an eye on big data uses in the law risk being blindsided.”

Legal processing and predictive coding

Predictive coding is essentially a way of using software infused with an element of artificial intelligence (using this term loosely) to automatically sift through large volumes of documents in order to speed up the disclosure process. Although it has been used in America for several years, the High Court only very recently officially approved the use of predictive coding in the case of Pyrrho Investments v MWB Property. It’s a good example of applying a big data technique to solve a big data problem.

The automation and AI aspects of predictive coding are cropping up in many discussions about the future of the legal profession and fears have been raised that the technical tail may start to wag the legal dog or replace it altogether. Certainly in terms of big data, lawyers who can harness the right software tools will have an advantage over their technologically cautious rivals, both in terms of time savings and risk management. One product worth mentioning in this regard is Lex Machina which offers a specialised “legal analytics platform” to help lawyers decide on the best strategies to adopt in any specific case by analysing the vast reams of information on outcomes in previous similar cases and predicting case outcomes based upon patterns or trends. If this is all beginning to sound a little like chess (and analogous to Deep Blue beating Kasparov) it’s worth remembering that even the most sophisticated software is still programmed by humans!

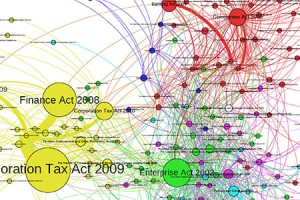

Making better law

One of the aims of the Big Data for Law project is to trawl through the statute book and look for patterns, thereby identifying “useful and effective drafting practices and solutions that deliver good law.” According to John Sheridan, digital director at National Archives which has been tasked with delivering this project, the “big data revolution provides us with a real opportunity to understand how the statute book works, and to use those insights to deliver better law”. So, aside from posing new legal problems and helping lawyers apply existing law, big data can even potentially be used to create or update the law itself.

Further reading

European Commission: EU-US Privacy Shield FAQs

Internet Newsletter for Lawyers: Big Data for Law

LexisNexis: Predictive Coding

Computers & Law: Agreement on GDPR

Wall Street Journal: Artificial Intelligence May Reduce E-Discovery Costs

Wired: Who owns your data?

Alex Heshmaty is a legal copywriter and journalist with a particular interest in legal technology. He runs Legal Words, a copywriting agency in Bristol. Email alex@legalwords.co.uk. Twitter @alexheshmaty.